This article is part of a series on interpretable predictive models, in this case covering a model type called Additive Decision Trees. The previous article described ikNN, an interpretable variation of kNN models, based on ensembles of 2D kNNs.

Additive Decision Trees are a variation of standard decision trees, constructed in a similar manner, but in a way that can often allow them to be more accurate, more interpretable, or both. They include some nodes that are somewhat more complex than standard decision tree nodes (though usually only slightly), but can often be constructed with far fewer nodes, allowing for more comprehensible trees overall.

The main project is: https://github.com/Brett-Kennedy/AdditiveDecisionTree. Both a classifier (imported in the usage example later in this article as AdditiveDecisionTreeClasssifier) and an AdditiveDecisionTreeRegressor class are provided.

Additive Decision Trees were motivated by the lack of options available for interpretable classification and regression models. Interpretable models are desirable in a number of scenarios, including high-stakes environments, audited environments (where we must understand well how the models behave), and cases where we must ensure the models are not biased against protected classes (for example, discriminating based on race or gender), among others.

As covered in the article on ikNN, there are some options available for interpretable classifiers and regression models (such as standard decision trees, rule lists, rule sets, linear/logistic regression and a small number of others), but far fewer than one may wish.

Standard Decision Trees

One of the most commonly used interpretable models is the decision tree. It often works well, but not in all cases. Decision trees may not always achieve a sufficient level of accuracy, and where they do, they may not always be reasonably considered interpretable.

Decision trees can often achieve high accuracy only when grown to large sizes, which eliminates any interpretability. A decision tree with five or six leaf nodes will be quite interpretable; a decision tree with a hundred leaf nodes is close to a black box. Though arguably more interpretable than a neural network or boosted model, it becomes very difficult to fully make sense of the predictions of decision trees with very large numbers of leaf nodes, especially as each may be associated with quite long decision paths. This is the primary issue Additive Decision Trees were designed to address.

Additive Decision Trees also address some other well-known limitations of decision trees, in particular their limited stability (small differences in the training data can result in quite different trees), their necessity to split based on fewer and fewer samples lower in the trees, repeated sub-trees, and their tendency to overfit if not restricted or pruned.

To consider more closely the issue where splits are based on fewer and fewer samples lower in the trees: this is due to the nature of the splitting process used by decision trees; the dataspace is divided into separate regions at each split. The root node covers every record in the training data and each child node covers a portion of this. Each of their child nodes covers a portion of that, and so on. Given this, splits lower in the tree become progressively less reliable.

These limitations are typically addressed by ensembling decision trees, either through bagging (as with Random Forests) or boosting (as with CatBoost, XGBoost, and LGBM). Ensembling results in uninterpretable, though generally more accurate, models. Other techniques to make decision trees more stable and accurate include constructing oblivious trees (this is done, for example, within CatBoost) and oblique decision trees (trees where the splits may be at oblique angles through the dataspace, as opposed to the axis-parallel splits that are normally used with decision trees).

As decision trees are likely the most, or among the most, commonly used models where interpretability is required, our comparisons, both in terms of accuracy and interpretability, are made with respect to standard decision trees.

Introduction to Additive Decision Trees

Additive Decision Trees will not always outperform standard decision trees, but quite often will, and are usually worth testing where an interpretable model is useful. In some cases, they may provide higher accuracy, in some cases improved interpretability, and in many cases, both. Testing to date suggests this is more true for classification than regression.

Additive Decision Trees are not intended to be competitive with approaches such as boosting or neural networks in terms of accuracy, but are simply a tool to generate interpretable models. Their appeal is that they can often produce models comparable in accuracy to deeper standard decision trees, while having a lower overall complexity compared to these, very often considerably lower.

Intuition Behind Additive Decision Trees

The intuition behind Additive Decision Trees is that often the true function, f(x), mapping the input x to the target y, is based on logical conditions (with IF-ELSE logic, or can be approximated with IF-ELSE logic); and in other cases it is simply a probabilistic function where each input feature may be considered somewhat independently (as with the Naive Bayes assumption).

The true f(x) can have different types of feature interactions: cases where the value of one feature affects how other features relate to the target. And these may be stronger or weaker in different datasets.

For example, the true f(x) may include something to the effect:

True f(x) Example 1

If A > 10 Then: y = class Y
Elseif B < 19 Then: y = class X
Elseif C * D > 44 Then: y = class Y
Else y = class Z

This is an example of the first case, where the true f(x) is composed of logical conditions and may be accurately (and in a simple manner) represented as a series of rules, such as in a Decision Tree (as below), Rule List, or Rule Set.

A > 10
| - LEAF: y = class Y
| - B > 19
    | (subtree related to C*D omitted)
    | - LEAF: y = class X

Here, a simple tree can be created to represent the rules related to features A and B.

But the rule related to C*D will generate a very large sub-tree, as the tree may only split based on either C or D at each step. For example, for values of C over 1.0, values of D over 44 will result in class Y. For values of C over 1.1, values of D over 40 will result in class Y. For values of C over 1.11, values of D over 39.64 will result in class Y. This must be worked out for all combinations of C and D to as fine a level of granularity as is possible given the size of the training data. The sub-tree may be accurate, but will be large, and will be close to incomprehensible.
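To make this staircase concrete, the short sketch below computes, for a few values of C, the cut-off on D that an axis-parallel split would need in order to reproduce the rule C * D > 44. The function name d_threshold is purely illustrative and is not part of any library.

```python
def d_threshold(c, product=44.0):
    """Cut-off on D above which c * D exceeds `product` (assumes c > 0)."""
    return product / c

# Every distinct value of C needs its own cut-off on D, which is why a tree
# restricted to single-feature splits needs so many nodes for this rule.
for c in (1.0, 1.1, 1.11, 2.0, 4.0):
    print(f"C = {c}: class Y when D > {d_threshold(c):.2f}")
```

The cut-off changes continuously with C, so the number of splits needed is limited only by how finely the training data lets the tree resolve it.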

Alternatively, the true f(x) may be a set of patterns related to probabilities, more of the form:

True f(x) Example 2

The higher A is, the more likely y is to be class X and less likely to be Z,
regardless of B, C, and D

The higher B is, the more likely y is to be class Y and less likely to be X,
regardless of A, C, and D

The lower C is, the more likely y is to be class Z and less likely to be X,
regardless of A, B, and D

Here, the classes are predicted entirely based on probabilities related to each feature, with no feature interactions. In this form of function, for each instance, the feature values each contribute some probability to the target value and these probabilities are summed to determine the overall probability distribution.
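A function of this additive form can be sketched in a few lines. The contribution values below are invented for illustration (the features are assumed to be scaled to the range 0 to 1); only the structure, independent per-feature contributions that are summed and normalized, reflects the description above.

```python
def contributions(a, b, c):
    """Hypothetical per-feature class contributions; a, b, c assumed in [0, 1]."""
    return [
        {"X": a, "Y": 0.0, "Z": 1.0 - a},      # higher A: more X, less Z
        {"X": 1.0 - b, "Y": b, "Z": 0.0},      # higher B: more Y, less X
        {"X": c, "Y": 0.0, "Z": 1.0 - c},      # lower C: more Z, less X
    ]

def predict_proba(a, b, c):
    """Sum the independent contributions, then normalize to a distribution."""
    totals = {"X": 0.0, "Y": 0.0, "Z": 0.0}
    for contribution in contributions(a, b, c):
        for cls, value in contribution.items():
            totals[cls] += value
    norm = sum(totals.values())
    return {cls: value / norm for cls, value in totals.items()}

probs = predict_proba(a=0.9, b=0.1, c=0.9)
print(max(probs, key=probs.get), probs)
```

No feature's contribution depends on the value of another feature, which is exactly what a tree of nested conditions struggles to express compactly.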

Here, there is no simple tree that could be created. There are three target classes (X, Y, and Z). If f(x) were simpler, containing only a rule related to feature A:

The higher A is, the more likely y is to be class X and less likely to be Z,
regardless of B, C, and D

We could create a small tree based on the split points in A where each of the three classes becomes most likely. This may require only a small number of nodes: the tree would likely first split A at roughly its midpoint, then each child node would split A in roughly half again and so on, until we have a tree where the nodes each indicate either X, Y, or Z as the most likely class.

But, given there are three such rules, it’s not clear which would be represented by splits first. If we, for example, split on feature B first, we need to handle the logic related to features A and C in each subtree (repeating the logic related to these multiple times in the tree). If we split first based on feature B, then feature A, then feature C, then by the time we determine the split points for feature C, we may have few enough records covered by the nodes that the split points are selected at sub-optimal points.

Example 2 could likely (with enough training data) be represented by a decision tree with reasonably high accuracy, but the tree would be quite large, and the splits would not likely be intelligible. Lower and lower in the tree, the split points become less and less comprehensible, as they’re simply the split points in one of the three relevant features that best split the data given the progressively smaller amount of training data in each lower node.

In Example 3, we have a similar f(x), but with some feature interactions in the form of conditions and multiplication:

True f(x) Example 3

The higher A is, the more likely y is to be class X,
regardless of B, C and D

The higher B is, up to 100.0, the more likely y is class Y,
regardless of A, C and D

The higher B is, where B is 100.0 or more, the more likely y is to be class Z,
regardless of A, C and D

The higher C * D is, the more likely y is class X,
regardless of A and B.

This is a combination of the ideas in Example 1 and Example 2. Here we have both conditions (based on the value of feature B) and cases where the features are independently related to the probability of each target class.

While there are other ways to categorize functions, this system is useful, and many true functions may be viewed as some combination of these, somewhere between Example 1 and Example 2.

Standard decision trees do not explicitly assume the true function is similar to Example 1 and can accurately (often through the use of very large trees) capture non-conditional relationships such as those based on probabilities (cases more like Examples 2 or 3). They do, however, model the functions as conditions, which can limit their expressive power and lower their interpretability.

Additive Decision Trees remove the assumption in standard decision trees that f(x) may be best modeled as a set of conditions, but do support conditions where the data suggests they exist. The central idea is that the true f(x) may be based on logical conditions, probabilities (additive, independent rules), or some combination of these.

In general, standard decision trees may perform very well (in terms of interpretability) where the true f(x) is similar to Example 1.

Where the true f(x) is similar to Example 2, we may be better to use a linear or logistic regression, Naive Bayes models, or GAM (Generalized Additive Model), or other models that simply predict based on a weighted sum of each independent feature. However, these models can struggle with functions similar to Example 1.

Additive Decision Trees can adapt to both cases, though may perform best where the true f(x) is somewhere between, as with Example 3.

Constructing Additive Decision Trees

We describe here how Additive Decision Trees are constructed. The process is simpler to present for classification problems, and so the examples relate to this, but the ideas apply equally to regression.

The approach taken by Additive Decision Trees is to use two types of split.

First, where appropriate, it may split the dataspace in the same way as standard decision trees. As with standard decision trees, most nodes in an Additive Decision Tree represent a region of the full space, with the root representing the full space. Each node splits this region in two, based on a split point in one feature. This results in two child nodes, each covering a portion of the space covered by the parent node. For example, in Example 1, we may have a node (the root node) that splits the data based on Feature A at 10. The rows where A is less than or equal to 10 would go to one child node and the rows where A is greater than 10 would go to the other child node.

Second, in Additive Decision Trees, the splits may be based on an aggregate decision based on numerous potential splits (each is a standard split for a single feature and split point). That is, in some cases, we do not rely on a single split, but assume there may be numerous features that are valid to split on at a given node, and take the average of splitting in each of these ways. When splitting in this way, there are no other nodes below, so these become leaf nodes, known as Additive Nodes.

Additive Decision Trees are constructed such that the first type of split (standard decision tree nodes, based on a single feature) appears higher in the tree, where there are larger numbers of samples to base the splits on and they may be found in a more reliable manner. In these cases, it’s more reasonable to rely on a single split on a single feature.

The second type (additive nodes, based on aggregations of many splits) appears lower in the tree, where there are fewer samples to rely on.

As an example, creating a tree to represent Example 3 may produce an Additive Decision Tree such as:

if B > 100:
    calculate each of and take the average estimate:
        if A <= vs > 50: calculate the probabilities of X, Y, and Z in both cases
        if B <= vs > 150: calculate the probabilities of X, Y, and Z in both cases
        if C <= vs > 60: calculate the probabilities of X, Y, and Z in both cases
        if D <= vs > 200: calculate the probabilities of X, Y, and Z in both cases
else (B <= 100):
    calculate each of and take the average estimate:
        if A <= vs > 50: calculate the probabilities of X, Y and Z in both cases
        if B <= vs > 50: calculate the probabilities of X, Y and Z in both cases
        if C <= vs > 60: calculate the probabilities of X, Y and Z in both cases
        if D <= vs > 200: calculate the probabilities of X, Y and Z in both cases

In this example, we have a normal node at the root, which is split on feature B at 100. Below that we have two additive nodes (which are always leaf nodes). During training, we may determine that splitting this node based on features A, B, C, and D are all productive; while picking one may appear to work slightly better than the others, it is somewhat arbitrary which is selected. When training standard decision trees, which one is selected is very often a matter of minor variations in the training data.

To compare this to a standard decision tree: a decision tree would select one of the four possible splits in the first node and also one of the four possible splits in the second node. In the first node, if it selected, say, Feature A (split at 50), then this would split this node into two child nodes, which could then be further split into more child nodes and so on. This can work well, but the splits would be determined based on fewer and fewer rows. And it may not be necessary to split the data into finer regions: the true f(x) may not have conditional logic.

In this case, the Additive Decision Tree examined the four possible splits and decided to take all four. The predictions for these nodes would be based on adding the predictions of each.

One major advantage of this is that each of the four splits is based on the full data available in this node; each is as accurate as is possible given the training data in this node. We also avoid a potentially very large sub-tree below this.

Reaching these nodes during prediction, we would add the predictions together. For example, if a record has values for A, B, C, and D of (60, 120, 80, 120), then when it hits the root node, we compare the value of B (120) to the split point 100. B is over 100, so we go to the first additive node. Now, instead of another split, there are four splits. We split based on the values in A, in B, in C, and in D. That is, we calculate the prediction based on all four splits. In each case, we get a set of probabilities for classes X, Y, and Z. We add these together to get the final probabilities of each class.

The first split is based on A at split point 50. The row has value 60, so there is a set of probabilities for each target class (X, Y, and Z) associated with this split. The second split is based on B at split point 150. B has value 120, so there is another set of probabilities for each target class associated with this split. The same holds for the other two splits within this additive node. We find the predictions for each of these four splits and add them for the final prediction for this record.
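The walk-through above can be sketched as follows. This is a minimal illustration, not the project's implementation: the Split class, the helper name, and all class probabilities are invented for the example, and the per-split predictions are averaged (which ranks the classes the same way as adding them).

```python
from dataclasses import dataclass

CLASSES = ("X", "Y", "Z")

@dataclass
class Split:
    feature: str
    threshold: float
    probs_le: tuple   # class probabilities where value <= threshold
    probs_gt: tuple   # class probabilities where value > threshold

    def predict(self, row):
        return self.probs_gt if row[self.feature] > self.threshold else self.probs_le

def additive_node_predict(splits, row):
    """Average the class probabilities given by every split in the node."""
    per_split = [s.predict(row) for s in splits]
    return tuple(sum(p[i] for p in per_split) / len(per_split)
                 for i in range(len(CLASSES)))

# Additive node reached when B > 100; the probabilities are hypothetical.
node_b_over_100 = [
    Split("A", 50, (0.2, 0.1, 0.7), (0.5, 0.1, 0.4)),
    Split("B", 150, (0.3, 0.1, 0.6), (0.2, 0.0, 0.8)),
    Split("C", 60, (0.2, 0.2, 0.6), (0.4, 0.1, 0.5)),
    Split("D", 200, (0.3, 0.1, 0.6), (0.6, 0.1, 0.3)),
]

row = {"A": 60, "B": 120, "C": 80, "D": 120}
probs = additive_node_predict(node_b_over_100, row)
predicted = CLASSES[probs.index(max(probs))]
print(predicted, probs)
```

Each split only has to store two small probability vectors, so the whole node remains easy to display and to inspect.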

This provides, then, a simple form of ensembling within a decision tree. We receive the normal benefits of ensembling, more accurate and stable predictions, while actually increasing interpretability.

This may appear to create a more complex tree, and in a sense it does: the additive nodes are more complex than standard nodes. However, the additive nodes tend to aggregate relatively few splits (usually about two to five). They also remove the need for a very large number of nodes below them. The net reduction in complexity is often quite significant.

Interpretability with Standard Decision Trees

In standard decision trees, global explanations (explanations of the model itself) are presented as the tree: we simply render it in some way (such as with scikit-learn’s plot_tree() or export_text() functions). This allows us to understand the predictions that will be produced for any unseen data.

Local explanations (explanations of the prediction for a single instance) are presented as the decision path: the path from the root to the leaf node where the instance ends, with each split point on the path leading to this final decision.

The decision paths can be difficult to interpret. They can be very long, can include nodes that are not relevant to the current prediction, and can be somewhat arbitrary (where one split was selected by the decision tree during training, there may be multiple others that are equally valid).

Interpretability of Additive Decision Trees

Additive Decision Trees are interpreted in mostly the same way as standard decision trees. The only difference is additive nodes, where there are multiple splits as opposed to one.

The maximum number of splits aggregated together is configurable, but 4 or 5 is typically sufficient. Often, as well, all splits agree, and only one needs to be presented to the user. And in fact, even where the splits disagree, the majority prediction may be presented as a single split. Therefore, the explanations are usually similar to those for standard decision trees, but with shorter decision paths.

This, then, produces a model where there are a small number of standard (single) splits, ideally representing the true conditions, if any, in the model, followed by additive nodes, which are leaf nodes that average the predictions of multiple splits, providing more robust predictions. This reduces the need to split the data into progressively smaller subsets, each with less statistical significance.

Pruning Algorithm

Additive Decision Trees first construct standard decision trees. They then run a pruning algorithm to try to reduce the number of nodes by combining many standard nodes into a single node (an Additive Node) that aggregates predictions. The idea is: where there are many nodes in a tree, or in a sub-tree within a tree, this may be due to the tree attempting to narrow in on a prediction while balancing the influence of many features.

The algorithm behaves similarly to most pruning algorithms, starting at the bottom, at the leaves, and working towards the root node. At each node, a decision is made to either leave the node as is, or convert it to an additive node; that is, a node combining multiple data splits.

At each node, the accuracy of the tree is evaluated on the training data given the current split, then again treating this node as an additive node. If the accuracy is higher with this node set as an additive node, it is set as such, and all nodes below it are removed. This node itself may later be removed, if a node above it is converted to an additive node. Testing indicates a very significant proportion of sub-trees benefit from being aggregated in this way.
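The bottom-up pass described above can be sketched as below. This is a simplified illustration under stated assumptions, not the code in the AdditiveDecisionTree project: the Node class, best_stumps() and prune() are hypothetical names, each split's prediction is represented by its training class counts, and the candidate splits are simply the best single threshold per feature.

```python
from collections import Counter

class Node:
    """A leaf (counts only), a standard split, or an additive node (stumps set)."""
    def __init__(self, counts=None, feature=None, threshold=None, left=None, right=None):
        self.counts = counts              # Counter of training labels, for leaves
        self.feature, self.threshold = feature, threshold
        self.left, self.right = left, right
        self.stumps = None                # list of (feature, threshold, counts_le, counts_gt)

    def predict(self, row):
        if self.stumps is not None:       # additive node: add the class counts of every split
            total = Counter()
            for f, t, le, gt in self.stumps:
                total += gt if row[f] > t else le
            return total.most_common(1)[0][0]
        if self.feature is None:          # ordinary leaf
            return self.counts.most_common(1)[0][0]
        child = self.right if row[self.feature] > self.threshold else self.left
        return child.predict(row)

def best_stumps(rows, labels, max_splits=4):
    """Best single-threshold split per feature, ranked by training accuracy."""
    found = []
    for f in range(len(rows[0])):
        best = None
        for t in sorted({r[f] for r in rows})[:-1]:
            le = Counter(l for r, l in zip(rows, labels) if r[f] <= t)
            gt = Counter(l for r, l in zip(rows, labels) if r[f] > t)
            correct = max(le.values()) + max(gt.values())
            if best is None or correct > best[0]:
                best = (correct, (f, t, le, gt))
        if best is not None:
            found.append(best)
    found.sort(key=lambda item: item[0], reverse=True)
    return [stump for _, stump in found[:max_splits]]

def accuracy(root, X, y):
    return sum(root.predict(r) == l for r, l in zip(X, y)) / len(X)

def prune(node, root, rows, labels, X, y):
    """Bottom-up pass; rows/labels are the training records that reach `node`."""
    if node.feature is None or not rows:
        return
    for child, keep in ((node.left, lambda v: v <= node.threshold),
                        (node.right, lambda v: v > node.threshold)):
        part = [(r, l) for r, l in zip(rows, labels) if keep(r[node.feature])]
        prune(child, root, [r for r, _ in part], [l for _, l in part], X, y)
    before = accuracy(root, X, y)
    node.stumps = best_stumps(rows, labels)
    if node.stumps and accuracy(root, X, y) > before:
        node.left = node.right = None     # the sub-tree below is no longer needed
    else:
        node.stumps = None                # keep the standard split

# Toy data: the label follows feature 1, but the hand-built tree splits on feature 0.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 1, 0, 1]
root = Node(feature=0, threshold=0.5,
            left=Node(counts=Counter({0: 1, 1: 1})),
            right=Node(counts=Counter({0: 1, 1: 1})))
acc_before = accuracy(root, X, y)
prune(root, root, X, y, X, y)
acc_after = accuracy(root, X, y)
print(acc_before, acc_after, root.stumps is not None)
```

In this toy case the root is converted because aggregating the two candidate splits classifies the training rows better than the original single split. A fully grown standard tree would already fit its training data closely, so how often conversions happen in practice depends on how the initial tree is constrained.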

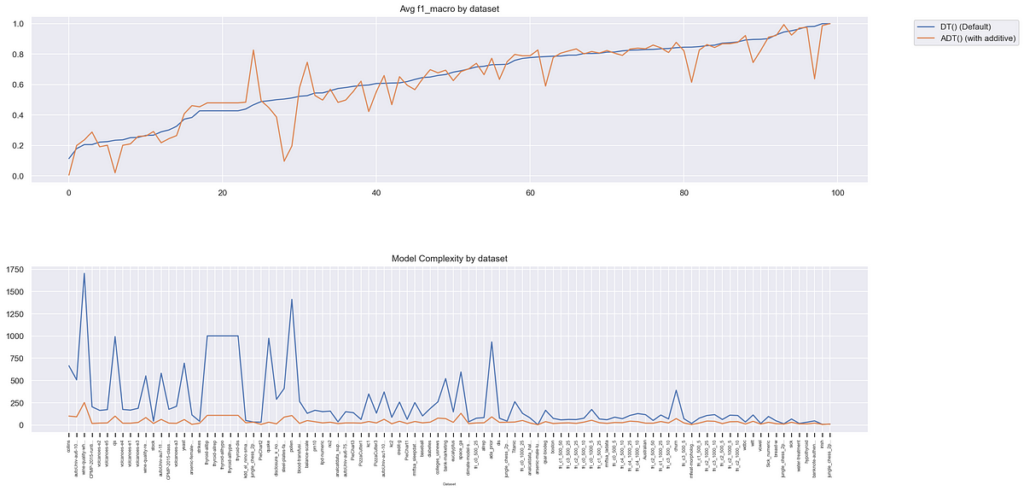

To evaluate the effectiveness of the tool we considered both accuracy (macro F1 score for classification, and normalized root mean squared error (NRMSE) for regression) and interpretability, measured by the size of the tree. Details regarding the complexity metric are included below. Further details about the evaluation tests are provided on the GitHub page.

To evaluate, we compared to standard decision trees, comparing where both models used default hyperparameters, and again where both models used a grid search to estimate the best parameters. 100 datasets selected randomly from OpenML were used.

This used a tool called DatasetsEvaluator, though the experiments can be reproduced easily enough without it. DatasetsEvaluator is simply a convenient tool to simplify such testing and to remove any bias in selecting the test datasets.

Results for classification on 100 datasets

Here ‘DT’ refers to scikit-learn decision trees and ‘ADT’ refers to Additive Decision Trees. The Train-Test Gap was found by subtracting the macro F1 score on the test set from that on the train set, and is used to estimate overfitting. ADT models suffered considerably less from overfitting.
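For readers who want to reproduce the gap on their own data, the following is a small dependency-free sketch of the calculation. The function names are illustrative; in practice scikit-learn's f1_score with average='macro' would normally be used instead of the hand-written version here.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    scores = []
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

def train_test_gap(y_train, pred_train, y_test, pred_test):
    """Macro F1 on the train set minus macro F1 on the test set."""
    return macro_f1(y_train, pred_train) - macro_f1(y_test, pred_test)

# Toy labels: perfect on the training rows, one error on the test rows.
gap = train_test_gap([0, 0, 1, 1], [0, 0, 1, 1],
                     [0, 0, 1, 1], [0, 1, 1, 1])
print(f"Train-Test Gap: {gap:.3f}")
```

A larger positive gap means the model scores much better on the data it was trained on than on held-out data, which is the sign of overfitting the table is tracking.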

Additive Decision Trees performed very similarly to standard decision trees with respect to accuracy. There are many cases where standard decision trees do better, where Additive Decision Trees do better, and where they do about the same. The time required for ADT is longer than for DT, but still very small, averaging about 4 seconds.

The major difference is in the complexity of the generated trees.

The following plots compare the accuracy (top pane) and complexity (bottom pane), over the 100 datasets, ordered from lowest to highest accuracy with a standard decision tree.

The top plot tracks the 100 datasets on the x-axis, with F1 score (macro) on the y-axis. Higher is better. We can see, towards the right, where both models are quite accurate. To the left, we see several cases where DT fares poorly, but ADT does much better in terms of accuracy. We can also see that there are several cases where, in terms of accuracy, it is clearly preferable to use standard decision trees and several cases where it is clearly preferable to use Additive Decision Trees. In most cases, it may be best to try both (as well as other model types).

The second plot tracks the same 100 datasets on the x-axis, and model complexity on the y-axis. Lower is better. In this case, ADT is consistently more interpretable than DT, at least by the complexity metric used here. In all 100 cases, the trees produced are simpler, and frequently much simpler.

Additive Decision Trees follow the standard sklearn fit-predict API framework. We typically, as in this example, create an instance, call fit() and call predict().

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from AdditiveDecisionTree import AdditiveDecisionTreeClasssifier

# Load the data and hold out a test set
iris = load_iris()
X, y = iris.data, iris.target
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Fit the model and predict on the test set
adt = AdditiveDecisionTreeClasssifier()
adt.fit(X_train, y_train)
y_pred_test = adt.predict(X_test)

The GitHub page also provides example notebooks covering basic usage and evaluation of the model.

Additive Decision Trees provide two additional APIs to improve interpretability: output_tree() and get_explanations(). output_tree() provides a view of a decision tree similar to that given by export_text() in scikit-learn, though it provides somewhat more information.

get_explanations() provides the local explanations (in the form of the decision paths) for a specified set of rows. Here we get the explanations for the first five rows.

exp_arr = adt.get_explanations(X[:5], y[:5])
for exp in exp_arr:
    print("\n")
    print(exp)

The explanation for the first row is:

Initial distribution of classes: (0, 1): (159, 267)

Prediction for row 0: 0 -- Correct
Path: (0, 2, 6)

mean concave points is greater than 0.04891999997198582
    (has value: 0.1471) --> (Class distribution: (146, 20)

AND worst area is greater than 785.7999877929688
    (has value: 2019.0) --> (Class distribution: (133, 3)
where the majority class is: 0

From the first line we see there are two classes (0 and 1) and there are 159 instances of class 0 in the training data and 267 of class 1.

The root node is always node 0. This row is taken through nodes 0, 2, and 6, based on its values for ‘mean concave points’ and ‘worst area’. Information about these nodes can be found by calling output_tree(). In this case, all nodes on the path are standard decision tree nodes (none are additive nodes).

At each stage, we see the counts for both classes. After the first split, we are in a region where class 0 is most likely (146 to 20). After another split, class 0 is even more likely (133 to 3).

The next example shows a prediction for a row that goes through an additive node (node 3).

Initial distribution of classes: (0, 1): (159, 267)

Prediction for row 0: 1 -- Correct
Path: (0, 1, 3)

mean concave points is less than 0.04891999997198582
    (has value: 0.04781) --> (Class distribution: (13, 247)

AND worst radius is less than 17.589999198913574
    (has value: 15.11) --> (Class distribution: (7, 245)

AND vote based on:
  1: mean texture is less than 21.574999809265137
     (with value of 14.36) --> (class distribution: (1, 209))
  2: area error is less than 42.19000053405762
     (with value of 23.56) --> (class distribution: (4, 243))
The class with the most votes is 1

The last node is an additive node, based on two splits. In both splits, the prediction is strongly for class 1 (1 to 209 and 4 to 243). Accordingly, the final prediction is class 1.

The evaluation above is based on the global complexity of the models, which is the overall size of the trees, combined with the complexity of each node.

It’s also valid to look at the average local complexity (the complexity of each decision path: the length of the paths combined with the complexity of the nodes on the decision paths). ADT does well in this regard as well. But, for simplicity, we look here at the global complexity of the models.

For standard decision trees, the evaluation simply uses the number of nodes (a common metric for decision tree complexity, though others are commonly used, for example the number of leaf nodes). For additive trees, we do this as well, but each additive node is counted as many times as there are splits aggregated together at that node.

We, therefore, measure the total number of comparisons of feature values to thresholds (the number of splits) regardless of whether these are in multiple nodes or a single node. Future work will consider additional metrics.

For example, in a standard node we may have a split such as Feature C > 0.01. That counts as one. In an additive node, we may have multiple splits, such as Feature C > 0.01, Feature E > 3.22, Feature G > 990. That counts as three. This appears to be a sensible metric, though it is notoriously difficult and subjective to try to quantify the cognitive load of different forms of model.
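One way to read the counting rule above is sketched here. The nested-dictionary tree format is an assumption made for the example (it is not the project's internal representation), and the sketch follows the literal description: every standard node and leaf counts once, and an additive node counts once per aggregated split.

```python
def complexity(node):
    """Count 1 per standard node or leaf, and len(splits) per additive node."""
    if "splits" in node:                      # additive node
        return len(node["splits"])
    return 1 + sum(complexity(child) for child in node.get("children", []))

# Additive tree for Example 3: one standard root with two additive leaf nodes.
additive_tree = {
    "split": "B > 100",
    "children": [
        {"splits": ["A > 50", "B > 150", "C > 60", "D > 200"]},
        {"splits": ["A > 50", "B > 50", "C > 60", "D > 200"]},
    ],
}

# A small standard tree: a root, two internal nodes and four leaves.
leaf = {}
standard_tree = {
    "split": "A > 50",
    "children": [
        {"split": "C > 60", "children": [leaf, leaf]},
        {"split": "D > 200", "children": [leaf, leaf]},
    ],
}

print(complexity(additive_tree), complexity(standard_tree))
```

Under this reading an additive node is never "free": aggregating four splits costs as much as four standard nodes, so a lower score reflects a genuinely smaller number of comparisons.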

As well as being used as interpretable models, Additive Decision Trees may also be considered a useful XAI (Explainable AI) tool: they may be used as proxy models, and so provide explanations of black-box models. This is a common technique in XAI, where an interpretable model is trained to predict the output of a black-box model. Doing this, the proxy models can provide comprehensible, though only approximate, explanations of the predictions produced by black-box models. Typically, the same models that are appropriate to use as interpretable models may also be used as proxy models.

For example, if an XGBoost model is trained to predict a certain target (e.g. stock prices, weather forecasts, customer churn, etc.), the model may be accurate, but we may not know why the model is making the predictions it is. We can then train an interpretable model (such as a standard decision tree, Additive Decision Tree, ikNN, GAM, and so on) to predict, in an interpretable way, the predictions of the XGBoost model. This won’t work perfectly, but where the proxy model is able to predict the behavior of the XGBoost model reasonably accurately, it provides explanations that are usually approximately correct.
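How well a proxy tracks the black-box model is commonly summarized as the share of rows on which the two agree, often called fidelity. The sketch below uses two toy functions as stand-ins: black_box() plays the role of the trained XGBoost model and proxy() the role of the interpretable model. Both are invented for illustration.

```python
def fidelity(black_box, proxy, rows):
    """Fraction of rows where the proxy reproduces the black-box prediction."""
    agree = sum(black_box(row) == proxy(row) for row in rows)
    return agree / len(rows)

def black_box(row):
    # Stand-in for an opaque model: an interaction between two features
    a, b = row
    return int(a * b > 44)

def proxy(row):
    # Stand-in for an interpretable model: two simple single-feature conditions
    a, b = row
    return int(a > 6 and b > 6)

rows = [(a, b) for a in range(1, 11) for b in range(1, 11)]
score = fidelity(black_box, proxy, rows)
print(f"Proxy agrees with the black box on {score:.0%} of rows")
```

The explanations drawn from a proxy are only as trustworthy as this agreement, so it is worth measuring on held-out rows before relying on them.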

The source code is provided in a single .py file, AdditiveDecisionTree.py, which may be included in any project. It uses no non-standard libraries.

Though the final trees may be somewhat more complex than a standard decision tree of equal depth, Additive Decision Trees are more accurate than standard decision trees of equal depth, and simpler than standard decision trees of equal accuracy.

As with all interpretable models, Additive Decision Trees are not intended to be competitive in terms of accuracy with state-of-the-art models for tabular data such as boosted models. Additive Decision Trees are, though, competitive with most other interpretable models, both in terms of accuracy and interpretability. While no one tool will always be best, where interpretability is important, it is usually worth trying a number of tools, including Additive Decision Trees.

All images are by the author.
