Very often when working on classification or regression problems in machine learning, we’re strictly interested in getting the most accurate model we can. In some cases, though, we’re also interested in the interpretability of the model. While models like XGBoost, CatBoost, and LGBM can be very strong models, it can be difficult to determine why they’ve made the predictions they have, or how they will behave with unseen data. These are what are called black-box models: models where we don’t understand specifically why they make the predictions they do.

In many contexts this is fine; so long as we know they’re reasonably accurate most of the time, they can be very useful, and it’s understood they will be incorrect now and then. For example, on a website, we may have a model that predicts which ads will be most likely to generate sales if shown to the current user. If the model behaves poorly on the rare occasion, this may affect revenues, but there are no major issues; we just have a model that’s sub-optimal, but generally useful.

But, in other contexts, it can be very important to know why the models make the predictions that they do. This includes high-stakes environments, such as in medicine and security. It also includes environments where we need to ensure there are no biases in the models related to race, gender or other protected classes. It’s important, as well, in environments that are audited: where it’s necessary to understand the models to determine they are performing as they should.

Even in these cases, it’s sometimes possible to use black-box models (such as boosted models, neural networks, Random Forests and so on) and then perform what is called post-hoc analysis. This provides an explanation, after the fact, of why the model likely predicted as it did. This is the field of Explainable AI (XAI), which uses techniques such as proxy models, feature importances (e.g. SHAP), counterfactuals, or ALE plots. These are very useful tools, but they do have limitations, and, everything else equal, it’s preferable to have a model that’s interpretable in the first place, at least where possible.

With proxy models, we train a model that is interpretable (for example, a shallow decision tree) to learn the behavior of the black-box model. This can provide some level of explanation, but the proxy will not always match the black-box model, so the explanations are only approximate.
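As a rough illustration of the proxy-model idea, the sketch below trains a shallow decision tree to mimic a gradient-boosted classifier and measures how often the two agree. The dataset, the choice of black-box model, and the `fidelity` name are assumptions made for this example; they are not taken from any particular XAI library.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.33, random_state=42)

# The black-box model we would like to explain.
black_box = GradientBoostingClassifier(random_state=42).fit(X_train, y_train)

# The proxy is trained on the black box's predictions, not on the true labels.
proxy = DecisionTreeClassifier(max_depth=3, random_state=42)
proxy.fit(X_train, black_box.predict(X_train))

# Fidelity: how often the proxy agrees with the black box on unseen rows.
fidelity = (proxy.predict(X_test) == black_box.predict(X_test)).mean()
print(f"Proxy agrees with the black box on {fidelity:.1%} of test rows")

# The proxy itself can be read as a small set of rules.
print(export_text(proxy, feature_names=list(data.feature_names)))
```

Any fidelity below 100% means some black-box predictions are explained by rules the black box did not actually follow, which is the limitation described above.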

Feature importances are also quite useful, but indicate only what the relevant features are, not how they relate to the prediction, or how they interact with each other to form the prediction. They also have no ability to determine if the model will work reasonably with unseen data.

With interpretable models, we do not have these issues. The model is itself comprehensible and we can know exactly why it makes each prediction. The problem, though, is that interpretable models can have lower accuracy than black-box models. They will not always, but will often have lower accuracy. Most interpretable models, for most problems, will not be competitive with boosted models or neural networks. For any given problem, it may be necessary to try several interpretable models before an interpretable model of sufficient accuracy can be found, if any can be.

There are some interpretable models available today, but unfortunately, very few. Among these are decision trees, rule lists (and rule sets), GAMs (Generalized Additive Models, such as Explainable Boosting Machines), and linear/logistic regression. These can each be useful where they work well, but the options are limited. The implication is: it may be impossible for many projects to find an interpretable model that performs satisfactorily. There can be real benefits in having more options available.

We introduce here another interpretable model, called ikNN, or interpretable k Nearest Neighbors. This is based on an ensemble of 2D kNN models. While the idea is straightforward, it is also surprisingly effective, and quite interpretable. While it is not competitive in terms of accuracy with state-of-the-art models for prediction on tabular data, such as CatBoost, it can often provide accuracy that is close and that is sufficient for the problem. It is also quite competitive with decision trees and other existing interpretable models.

Interestingly, it also appears to have stronger accuracy than plain kNN models.

The main page for the project is: https://github.com/Brett-Kennedy/ikNN

The project defines a single class called ikNNClassifier. This can be included in any project by copying the interpretable_knn.py file and importing it. It provides an interface consistent with scikit-learn classifiers. That is, we generally simply need to create an instance, call fit(), and call predict(), similar to using Random Forest or other scikit-learn models.

Using an ensemble of 2D kNNs under the hood provides a number of advantages. One is the usual advantage of ensembling: we get more reliable predictions than when relying on a single model.

Another is that 2D spaces are straightforward to visualize. The model currently requires numeric input (as is the case with kNN), so all categorical features need to be encoded, but once this is done, every 2D space can be visualized as a scatter plot. This provides a high degree of interpretability.

And, it’s possible to determine the most relevant 2D spaces for each prediction, which allows us to present a small number of plots for each record. This allows fairly simple as well as complete visual explanations for each record.

ikNN is, then, an interesting model, as it is based on ensembling, but actually increases interpretability, while the opposite is more often the case.

kNN models are less used than many others, as they are not usually as accurate as boosted models or neural networks, or as interpretable as decision trees. They are, though, still widely used. They work based on an intuitive idea: the class of an item can be predicted based on the class of most of the items that are most similar to it.

For example, if we look at the iris dataset (as is used in an example below), we have three classes, representing three types of iris. If we collect another sample of iris and wish to predict which of the three types of iris it is, we can look at the most similar, say, 10 records from the training data, determine what their classes are, and take the most common of these.

In this example, we chose 10 to be the number of nearest neighbors to use to estimate the class of each record, but other values may be used. This is specified as a hyperparameter (the k parameter) with kNN and ikNN models. We wish to set k so as to use a reasonable number of similar records. If we use too few, the results may be unstable (each prediction is based on very few other records). If we use too many, the results may be based on some other records that aren’t that similar.

We also need a way to determine which are the most similar items. For this, at least by default, we use the Euclidean distance. If the dataset has 20 features and we use k=10, then we find the closest 10 points in the 20-dimensional space, based on their Euclidean distances.

Predicting for one record, we would find the 10 closest records from the training data and see what their classes are. If 8 of the 10 are class Setosa (one of the 3 types of iris), then we can assume this row is most likely also Setosa, or at least this is the best guess we can make.
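The procedure described above can be written out in a few lines. This is a minimal sketch of plain kNN using NumPy, with a hypothetical helper named `knn_predict`; it is meant only to make the steps concrete, and is not how scikit-learn or ikNN implement the search.

```python
from collections import Counter

import numpy as np
from sklearn.datasets import load_iris

def knn_predict(X_train, y_train, row, k=10):
    """Predict the class of `row` by majority vote among its k nearest training rows."""
    # Euclidean distance from `row` to every training row.
    distances = np.sqrt(((X_train - row) ** 2).sum(axis=1))
    # Indices of the k closest training rows.
    nearest = np.argsort(distances)[:k]
    # Count the classes of those rows and take the most common one.
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0], votes

iris = load_iris()
X, y = iris.data, iris.target

# Classify the first record (a Setosa) using all of the other records.
pred, votes = knn_predict(X[1:], y[1:], X[0], k=10)
print(iris.target_names[pred], dict(votes))
```

The `votes` counter is what a statement like "8 of the 10 are Setosa" refers to: it holds the number of neighbors found in each class.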

One issue with this is that it breaks down when there are many features, due to what’s called the curse of dimensionality. An interesting property of high-dimensional spaces is that, with enough features, the distances between points start to become meaningless: the nearest and farthest points from any given point end up at nearly the same distance.
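A small experiment can make this effect visible. The sketch below uses uniformly random points (an assumption chosen for simplicity, not data from the article) and a hypothetical helper, `relative_contrast`, that compares the farthest and nearest distances from a single query point as the number of features grows.

```python
import numpy as np

def relative_contrast(n_features, n_points=1000, seed=0):
    """Return (farthest - nearest) / nearest distance from one random query point."""
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, n_features))
    query = rng.random(n_features)
    distances = np.linalg.norm(points - query, axis=1)
    return (distances.max() - distances.min()) / distances.min()

# As the dimensionality grows, the farthest point is barely farther
# away than the nearest one, so "nearest" carries less information.
for d in (2, 20, 200, 2000):
    print(d, round(relative_contrast(d), 2))
```

The printed ratio shrinks as the number of features increases, which is one motivation for working in 2D subspaces instead of the full feature space.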

kNN also uses all features equally, though some may be much more predictive of the target than others. The distances between points, whether Euclidean, Manhattan, or another metric, are calculated considering all features equally. This is simple, but not always the most effective, given many features may be irrelevant to the target. Assuming some feature selection has been performed, this is less likely, but the relevance of the features will still not be equal.

And, the predictions made by kNN predictors are uninterpretable. The algorithm is quite intelligible, but the predictions can be difficult to understand. It’s possible to list the k nearest neighbors, which provides some insight into the predictions, but it’s difficult to see why a given set of records are the most similar, particularly where there are many features.
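To show what listing the neighbors looks like in practice, here is a short sketch using scikit-learn’s `KNeighborsClassifier` and its `kneighbors()` method. The choice of row 100 and of k=5 is arbitrary and made only for this example.

```python
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
X, y = iris.data, iris.target

knn = KNeighborsClassifier(n_neighbors=5).fit(X, y)

# kneighbors() returns the distances to, and indices of, the nearest rows.
row = X[100:101]  # one record, kept 2D as scikit-learn expects
distances, indices = knn.kneighbors(row)

for dist, idx in zip(distances[0], indices[0]):
    print(f"row {idx}: class={iris.target_names[y[idx]]}, "
          f"distance={dist:.2f}, values={X[idx]}")
```

Even with only four features, judging from the raw values why these rows are the closest takes some effort; with dozens of features it quickly becomes impractical.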

The ikNN model first takes each pair of features and creates a standard 2D kNN classifier using these features. So, if a table has 10 features, this creates 10 choose 2, or 45 models, one for each unique pair of features.
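The count of 45 can be checked directly. The snippet below enumerates the pairs for a table with 10 features; the column names are hypothetical placeholders.

```python
from itertools import combinations
from math import comb

feature_names = [f"feature_{i}" for i in range(10)]  # hypothetical column names

# Every unordered pair of distinct features defines one 2D subspace.
pairs = list(combinations(feature_names, 2))

print(len(pairs), comb(10, 2))
print(pairs[:3])
```

Since the number of pairs is n(n-1)/2, it grows quadratically with the number of features, which is worth keeping in mind for wide tables.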

It then assesses their accuracies with respect to predicting the target column using the training data. Given this, the ikNN model determines the predictive power of each 2D subspace. In the case of 45 2D models, some will be more predictive than others. To make a prediction, the 2D subspaces known to be most predictive are used, optionally weighted by their predictive power on the training data.
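The general idea can be sketched with scikit-learn’s `KNeighborsClassifier`. This is not the ikNN implementation; it is a simplified illustration under several assumptions: cross-validated accuracy on the training data stands in for "predictive power", the hypothetical `n_best` parameter selects the top subspaces, and class probabilities are combined by a score-weighted sum.

```python
from itertools import combinations

from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.33, random_state=42)

# Fit one 2D kNN per feature pair and score it on the training data.
# Cross-validated accuracy is an assumed measure of predictive power.
subspaces = []
for i, j in combinations(range(X_train.shape[1]), 2):
    cols = [i, j]
    model = KNeighborsClassifier(n_neighbors=10)
    score = cross_val_score(model, X_train[:, cols], y_train, cv=5).mean()
    subspaces.append((score, cols, model.fit(X_train[:, cols], y_train)))

# Keep only the most predictive subspaces (n_best is a hypothetical parameter).
n_best = 5
best = sorted(subspaces, key=lambda s: s[0], reverse=True)[:n_best]

# Combine the class probabilities, weighting each subspace by its score.
weighted = sum(score * model.predict_proba(X_test[:, cols])
               for score, cols, model in best)
# The iris classes are 0, 1, 2, so the column index is also the class label.
y_pred = weighted.argmax(axis=1)

print("accuracy:", (y_pred == y_test).mean())
```

Because each member of the ensemble only ever sees two features, every individual vote can later be drawn as a scatter plot, which is what makes this style of ensemble easy to inspect.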

Further, at inference, the purity of the set of nearest neighbors around a given row within each 2D space may be considered. This allows the model to weight more heavily both the subspaces proven to be more predictive on the training data and the subspaces that appear to be the most consistent in their prediction with respect to the current instance.

Consider two subspaces and a point shown here as a star. In both cases, we can find the set of k points closest to the point. Here we draw a green circle around the star, though the set of points does not actually form a circle (though there is a radius to the kth nearest neighbor that effectively defines a neighborhood).

These plots each represent a pair of features. In the case of the left plot, there is very high consistency among the neighbors of the star: they are entirely red. In the right plot, there is little consistency among the neighbors: some are red and some are blue. The first pair of features appears to be more predictive of the record than the second pair of features, which ikNN takes advantage of.
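One simple way to quantify this consistency is the fraction of the k neighbors that belong to the majority class. The helper below, `neighborhood_purity`, is a hypothetical name and an assumed definition used for illustration; the measure ikNN itself uses may differ.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import NearestNeighbors

def neighborhood_purity(X_2d, y, point, k=10):
    """Fraction of the k nearest neighbors of `point` that share the majority class."""
    nn = NearestNeighbors(n_neighbors=k).fit(X_2d)
    _, indices = nn.kneighbors(point.reshape(1, -1))
    counts = np.bincount(y[indices[0]])
    return counts.max() / k

iris = load_iris()
X, y = iris.data, iris.target
row = X[70]  # a Versicolor record, playing the role of the star

# Compare two feature pairs: the sepal measurements and the petal measurements.
for cols in ([0, 1], [2, 3]):
    names = [iris.feature_names[c] for c in cols]
    print(names, neighborhood_purity(X[:, cols], y, row[cols], k=10))
```

A purity of 1.0 corresponds to the left plot described above, where every neighbor has the same class; values nearer to 1/(number of classes) correspond to a mixed neighborhood like the one in the right plot.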

This approach allows the model to consider the influence of all input features, but weigh them in a manner that magnifies the influence of more predictive features, and diminishes the influence of less predictive features.

We first demonstrate ikNN with a toy dataset, specifically the iris dataset. We load in the data, do a train-test split, and make predictions on the test set.

    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split

    from interpretable_knn import ikNNClassifier

    iris = load_iris()
    X, y = iris.data, iris.target

    clf = ikNNClassifier()

    # Hold out a third of the rows for testing
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.33, random_state=42)

    # Fit on the training rows, then predict the held-out rows
    clf.fit(X_train, y_train)
    y_pred = clf.predict(X_test)

For prediction, this is all that is required. But, ikNN also provides tools for understanding the model, specifically the graph_model() and graph_predictions() APIs.

For an example of graph_model():

    clf.graph_model(iris.feature_names)

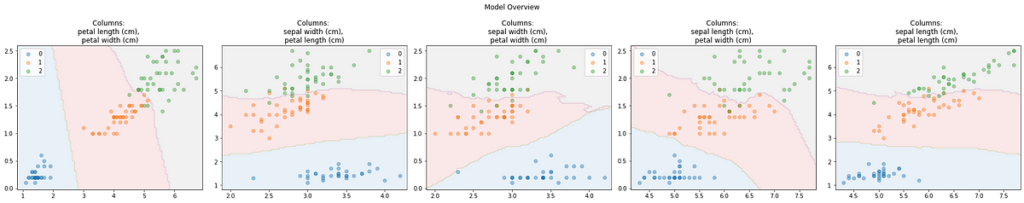

This provides a quick overview of the dataspace, plotting, by default, five 2D spaces. The dots show the classes of the training data. The background color shows the predictions made by the 2D kNN for each region of the 2D space.

The graph_predictions() API will explain a specific row, for example:

Here, the row being explained is shown as a red star. Again, five plots are used by default, but for simplicity, this uses just two. In both plots, we can see where Row 0 is located relative to the training data and the predictions made by the 2D kNN for this 2D space.

Although it is configurable, by default only five 2D spaces are used by each ikNN prediction. This ensures the prediction times are fast and the visualizations simple. It also means that the visualizations are showing the true predictions, not a simplification of the predictions, ensuring the predictions are completely interpretable.

For most datasets, for most rows, all or almost all 2D spaces agree on the prediction. However, where the predictions are incorrect, it may be useful to examine more 2D plots in order to better tune the hyperparameters to suit the current dataset.

A set of tests was performed using a random set of 100 classification datasets from OpenML. Comparing the F1 (macro) scores of standard kNN and ikNN models, ikNN had higher scores for 58 datasets and kNN for 42.

ikNN models do even a bit better when a grid search is performed to find the best hyperparameters. After doing this for both models on all 100 datasets, ikNN performed the best in 76 of the 100 cases. It also tends to have smaller gaps between the train and test scores, suggesting more stable models than standard kNN models.
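For readers who want to run a similar comparison, the sketch below shows a grid search scored by macro F1 for a standard scikit-learn kNN. The parameter grid is an assumption chosen for illustration; the grids used in the tests described above are not given in this article.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.33, random_state=42)

# An assumed, illustrative grid of kNN hyperparameters.
param_grid = {"n_neighbors": [3, 5, 10, 20], "weights": ["uniform", "distance"]}

search = GridSearchCV(
    KNeighborsClassifier(), param_grid, scoring="f1_macro", cv=5)
search.fit(X_train, y_train)

# Comparing the cross-validated and test scores gives a rough sense of stability.
print("best parameters:", search.best_params_)
print("CV F1 (macro):", round(search.best_score_, 3))
print("test F1 (macro):", round(search.score(X_test, y_test), 3))
```

Since ikNNClassifier is described as following the scikit-learn interface, the same pattern may apply to it, though its hyperparameter names and its compatibility with GridSearchCV should be checked against the project page rather than assumed.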

ikNN models can be somewhat slower than standard kNN models, but they still tend to be considerably faster than boosted models, and are still very fast, typically taking well under a minute for training, and usually only seconds.

The GitHub page provides some more examples and analysis of the accuracy.

While ikNN is likely not the strongest model where accuracy is the primary goal (though, as with any model, it can be on occasion), it is likely a model that should be tried where an interpretable model is necessary.

This page provided the basic information necessary to use the tool. It is simply necessary to download the .py file (https://github.com/Brett-Kennedy/ikNN/blob/main/ikNN/interpretable_knn.py), import it into your code, create an instance, train and predict, and (where desired) call graph_predictions() to view the explanations for any records you wish.

All images are by the author.
