
We explore novel video representation methods that are equipped with long-form reasoning capability. This is Part I, which focuses on video representation as graphs and on how to learn lightweight graph neural networks for several downstream applications. Part II focuses on sparse video-text transformers, and Part III gives a sneak peek into our latest and greatest explorations.

Existing video architectures tend to hit computation or memory bottlenecks after processing only a few seconds of video content. So, how do we enable accurate and efficient long-form visual understanding? An important first step is to have a model that actually runs on long videos. To that end, we explore novel video representation methods that are equipped with long-form reasoning capability.

What is long-form reasoning, and why does it matter?

Deep learning models such as convolutional networks and transformers brought a huge leap in image-based understanding tasks. The natural next step was to go beyond still images and explore video understanding.

Developing video understanding models requires two equally important ingredients. The first is a large-scale video dataset. The second is a learnable backbone that extracts video features efficiently.

On the dataset side, creating fine-grained and consistent annotations for a dynamic signal such as video is not trivial, even with the best intentions of both the system designers and the annotators. Naturally, the large video datasets that were created took the relatively easier route of annotating at the whole-video level.

On the backbone side, it was again natural to extend image-based models (such as CNNs or transformers) to video. A video is perceived as a collection of frames, each identical in size and shape to an image. For obvious memory-budget reasons, researchers built models that take sampled frames as input rather than all the video frames.

To put things into perspective, analyzing a 5-minute video clip at 30 frames/second means processing a bundle of 9,000 video frames. Neither CNNs nor transformers can operate on a sequence of 9,000 frames as a whole if that involves dense computation at the level of 16×16 rectangular patches extracted from every frame. Thus most models operate as follows: they take a short video clip as input, make a prediction, and then apply temporal smoothing. This falls short of the ideal scenario, in which the model looks at the video in its entirety.
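To make the 9,000-frame figure concrete, here is a back-of-the-envelope calculation. It assumes 224×224 input frames and a single dense self-attention map over all 16×16 patch tokens. Both are illustrative assumptions, not settings from our experiments.

```python
# Back-of-the-envelope cost of feeding a whole 5-minute clip to a patch-based model.
# Assumptions: 224x224 frames and one dense self-attention map over all patch tokens.
FPS = 30
DURATION_S = 5 * 60
PATCH_SIDE = 16
FRAME_SIDE = 224  # assumed resolution, not stated in the text

num_frames = FPS * DURATION_S                          # 9,000 frames
patches_per_frame = (FRAME_SIDE // PATCH_SIDE) ** 2    # 14 * 14 = 196 patches
num_tokens = num_frames * patches_per_frame            # 1,764,000 tokens
attention_entries = num_tokens ** 2                    # one N x N score matrix
attention_tb_fp16 = attention_entries * 2 / 1e12       # 2 bytes per fp16 score, in TB

print(f"frames={num_frames:,}  tokens={num_tokens:,}")
print(f"attention entries={attention_entries:.2e}  (~{attention_tb_fp16:.1f} TB in fp16)")
```

Under these assumptions, a single attention map needs about 3.11e+12 entries, roughly 6.2 TB in fp16. That is far beyond the memory of a single accelerator, which illustrates why clip-based processing became the default.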

Now comes the question. If we need to know whether a video shows 'swimming' or 'tennis', do we really need to analyze a minute's worth of content? The answer is most likely NO. In other words, models optimized for video recognition most likely learned to look at the background and other spatial context instead of learning to reason over what is actually happening in a 'long' video. We can call this phenomenon learning the spatial shortcut.

These models were good at video recognition tasks in general. Can you guess how they generalize to other tasks that require genuine temporal reasoning, such as action forecasting, video question answering, and the recently proposed episodic memory tasks? Since they were not trained to do temporal reasoning, they turned out to perform poorly on these applications.

So the way datasets were annotated prevented most video models from learning to reason over time and over sequences of actions. Gradually, researchers recognized this problem and started proposing benchmarks that address long-form reasoning. However, one problem persisted, and it is mostly memory-bound: how do we even take the first practical step, in which a model takes a long video as input rather than a sequence of short clips processed one after another? To address that, we propose a novel video representation method based on Spatio-Temporal Graph Learning (SPELL) to equip the model with long-form reasoning capability.

Let G = (V, E) be a graph with node set V and edge set E. In domains such as social networks, citation networks, and molecular structures, V and E are available to the system, and we say the graph is given as input to the learnable models.

Now consider the simplest possible case in a video, where each video frame is a node; together the frames form V. However, it is not clear whether and how node t1 (the frame at time t1) and node t2 (the frame at time t2) are connected. The set of edges, E, is therefore not provided. Without E, the topology of the graph is incomplete, so no "ground truth" graph is available.

One of the most important challenges, then, is how to convert a video into a graph. This graph can be considered a latent graph, since the dataset contains no labeled (or "ground truth") graph.

When a video is modeled as a temporal graph, many video understanding problems can be formulated as either node classification or graph classification problems. We use the SPELL framework for tasks such as Action Boundary Detection, Temporal Action Segmentation, and Video Summarization / highlight reel detection.

Video Summarization: Formulated as a node classification problem

Here we present such a framework, VideoSAGE, which stands for Video Summarization with Graph Representation Learning. With this framework, we apply the video-as-a-temporal-graph approach to video highlight reel creation. The pipeline has three steps:

1. We convert an input video into a graph whose nodes correspond to the individual video frames.
2. We impose sparsity on the graph by connecting only those pairs of nodes that lie within a specified temporal distance of each other.
3. We formulate video summarization as a binary node classification problem: each video frame is classified by whether it should belong to the output summary video.

A graph constructed this way (as shown in Figure 1) aims to capture long-range interactions among video frames, while the sparsity ensures the model trains without hitting memory and compute bottlenecks. Experiments on two datasets (SumMe and TVSum) demonstrate the effectiveness of this nimble model. It is competitive with existing state-of-the-art summarization approaches while being an order of magnitude more efficient in compute time and memory.
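The sparsification step can be illustrated with a minimal sketch. It assumes frames are indexed 0..N-1 and that "a specified temporal distance" means a window of `max_dist` frames. The function name and the window size are hypothetical; this is not the VideoSAGE code shipped in GraVi-T.

```python
from typing import List, Tuple


def temporal_window_edges(num_frames: int, max_dist: int) -> List[Tuple[int, int]]:
    """Return directed edges (src, dst) linking frames at most `max_dist` frames apart.

    Each frame is one node. Self-loops are omitted, and both directions are emitted
    so that messages can flow forward and backward in time.
    """
    edges = []
    for src in range(num_frames):
        lo = max(0, src - max_dist)
        hi = min(num_frames - 1, src + max_dist)
        for dst in range(lo, hi + 1):
            if dst != src:
                edges.append((src, dst))
    return edges


edges = temporal_window_edges(num_frames=10, max_dist=2)
print(len(edges))  # 34 directed edges, vs. 10 * 9 = 90 in a fully connected graph
```

The edge count grows roughly linearly with the number of frames (about 2 * max_dist edges per node), whereas a fully connected graph grows quadratically. This is what lets long videos fit in memory.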

We show that this structured sparsity yields comparable or improved results on the SumMe and TVSum video summarization datasets. VideoSAGE performs on par with existing state-of-the-art summarization approaches while consuming significantly smaller memory and compute budgets. The tables below show the comparative results of our method, VideoSAGE, in terms of performance and objective scores. This work has recently been accepted at a workshop at CVPR 2024. The paper details and more results are available here.

Action Segmentation: Formulated as a node classification problem

Similarly, we pose action segmentation as node classification on a sparse graph constructed from the input video. The GNN architecture is similar to the one above, except that the last GNN layer is a Graph Attention Network (GAT) layer instead of the SAGEConv layer used for video summarization. We perform experiments on the 50-Salads dataset. We use MSTCN or ASFormer as the stage-1 initial feature extractor. Next, we apply our sparse, bi-directional GNN model, which performs concurrent temporal "forward" and "backward" local message-passing operations. The GNN model further refines the final, fine-grained per-frame action predictions of our system. Refer to Table 2 for the results.
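The idea of separate "forward" and "backward" message passing can be shown in a small hypothetical sketch. It assumes node index equals frame index, so an edge with src < dst carries information forward in time. It uses plain mean aggregation with no learnable weights. In an actual model, each direction would get its own learned transform (for example a GAT or SAGEConv layer); here we only show how messages are routed.

```python
from typing import List, Sequence, Tuple

Edge = Tuple[int, int]         # (source node, destination node)
Features = List[List[float]]   # one feature vector per node


def mean_aggregate(features: Sequence[Sequence[float]], edges: Sequence[Edge], num_nodes: int) -> Features:
    """Average the features arriving at each node; nodes with no incoming edge get zeros."""
    dim = len(features[0])
    sums = [[0.0] * dim for _ in range(num_nodes)]
    counts = [0] * num_nodes
    for src, dst in edges:
        for k in range(dim):
            sums[dst][k] += features[src][k]
        counts[dst] += 1
    return [[s / counts[i] if counts[i] else 0.0 for s in sums[i]] for i in range(num_nodes)]


def bidirectional_step(features: Sequence[Sequence[float]], edges: Sequence[Edge]) -> Features:
    """One round of separate forward-in-time and backward-in-time message passing.

    Returns [self | forward context | backward context] for every node.
    """
    n = len(features)
    forward = [(s, d) for s, d in edges if s < d]   # earlier frame -> later frame
    backward = [(s, d) for s, d in edges if s > d]  # later frame -> earlier frame
    fwd = mean_aggregate(features, forward, n)
    bwd = mean_aggregate(features, backward, n)
    return [list(features[i]) + fwd[i] + bwd[i] for i in range(n)]


frames = [[1.0], [2.0], [3.0], [4.0]]
edges = [(0, 1), (1, 0), (1, 2), (2, 1), (2, 3), (3, 2)]
print(bidirectional_step(frames, edges))
# [[1.0, 0.0, 2.0], [2.0, 1.0, 3.0], [3.0, 2.0, 4.0], [4.0, 3.0, 0.0]]
```

Keeping the two directions separate lets a downstream layer distinguish what happened before a frame from what happens after it. A single undirected aggregation would blur that distinction.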

In this section, we describe how to apply a similar graph-based approach in which nodes denote "objects" instead of whole video frames. We start with a specific example to describe the spatio-temporal graph approach.

Active Speaker Detection: Task formulated as node classification

Figure 2 gives an overview of our framework for the Active Speaker Detection (ASD) task. With audio-visual data as input, we construct a multimodal graph and cast ASD as a graph node classification task. Figure 3 demonstrates the graph construction process:

- First, we create a graph whose nodes correspond to each person in each frame. The edges represent spatial or temporal relationships among them.
- The initial node features are computed with simple, lightweight 2D convolutional neural networks (CNNs) instead of a complex 3D CNN or a transformer.
- Next, we perform binary node classification (active or inactive speaker) on each node by learning a lightweight three-layer graph neural network (GNN).

The graphs are constructed specifically to encode the spatial and temporal dependencies among the different facial identities. The GNN can therefore leverage this structure to model the temporal continuity of speech as well as the long-term spatial-temporal context, while requiring little memory and computation.

You may ask why the graph is constructed this way. This is where domain knowledge comes in.

Nodes that share the same face-id and lie within a time distance of each other are connected to model a real-world regularity: if a person is talking at t=1 and the same person is talking at t=5, chances are that person is also talking at t=2, 3, and 4.

Why do we connect different face-ids that share the same timestamp? Because, in general, if one person is talking, the others are most likely listening.

If we had connected all nodes to each other and made the graph dense, the model would have required huge memory and compute. It would also have become noisy.
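The two connection rules can be written as a small hypothetical sketch. It assumes each face detection carries a frame index and a face-id, and it uses τ = 0.9 s and 25 fps, the values quoted later in this post. The audio nodes of the real multimodal SPELL graph are omitted. The helper names are illustrative and not part of the GraVi-T API.

```python
from typing import List, NamedTuple, Tuple


class FaceNode(NamedTuple):
    frame: int    # frame index of the detected face
    face_id: str  # identity (face-track) label


def build_asd_edges(nodes: List[FaceNode], tau_s: float = 0.9, fps: float = 25.0) -> List[Tuple[int, int]]:
    """Directed edges following the two rules described above (hypothetical helper).

    Rule 1: same face-id and at most tau_s seconds apart -> temporal edge.
    Rule 2: different face-ids in the same frame         -> spatial edge.
    All other pairs stay unconnected, which keeps the graph sparse.
    """
    max_gap = tau_s * fps  # tau expressed in frames (22.5 here)
    edges = []
    for i, a in enumerate(nodes):
        for j, b in enumerate(nodes):
            if i == j:
                continue
            temporal = a.face_id == b.face_id and abs(a.frame - b.frame) <= max_gap
            spatial = a.face_id != b.face_id and a.frame == b.frame
            if temporal or spatial:
                edges.append((i, j))
    return edges


nodes = [FaceNode(0, "A"), FaceNode(0, "B"), FaceNode(10, "A"), FaceNode(10, "B"), FaceNode(40, "A")]
print(build_asd_edges(nodes))
# [(0, 1), (0, 2), (1, 0), (1, 3), (2, 0), (2, 3), (3, 1), (3, 2)]
```

In this toy input, the face of A at frame 40 is more than τ away from every other node, so it stays isolated. The double loop is quadratic and is only meant to make the rules explicit. A practical builder would sort nodes by time and only compare nodes inside the τ window.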

We perform extensive experiments on the AVA-ActiveSpeaker dataset. Our results show that SPELL outperforms all previous state-of-the-art (SOTA) approaches. Thanks to the ~95% sparsity of the constructed graphs, SPELL requires significantly fewer hardware resources for visual feature encoding (11.2M parameters) than ASDNet (48.6M parameters), one of the leading state-of-the-art methods at the time.
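One way to read a figure like "~95% sparsity" is as the fraction of possible edges that are absent. The sketch below assumes that definition: directed edges, no self-loops. The exact definition used in the paper is not stated here, and the 1,000-node graph in the example is purely illustrative.

```python
def graph_sparsity(num_nodes: int, num_directed_edges: int) -> float:
    """Fraction of possible directed, non-self-loop edges that are absent."""
    possible = num_nodes * (num_nodes - 1)
    return 1.0 - num_directed_edges / possible


# Hypothetical graph: 1,000 face nodes, each with 50 outgoing edges.
print(f"{graph_sparsity(1000, 1000 * 50):.1%}")  # 95.0%
```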

How long is the temporal context?

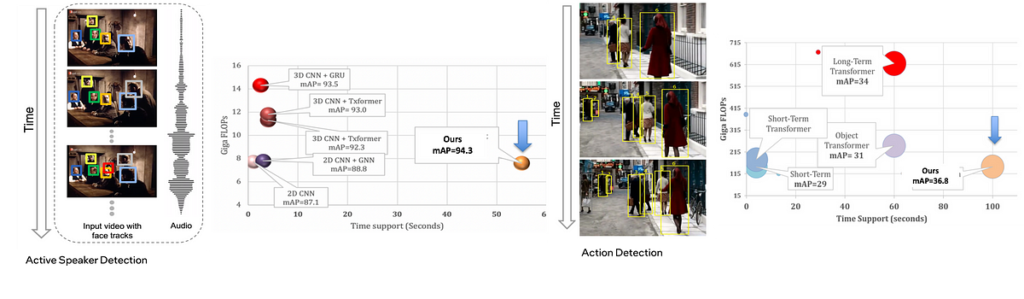

Refer to Figure 3, which shows the temporal context achieved by our methods on two different applications.

The hyper-parameter τ (0.9 seconds in our experiments) in SPELL imposes an additional constraint on direct connectivity between temporally distant nodes. Face identities across consecutive timestamps are always connected.

Below is an estimate of the effective temporal context size of SPELL. The AVA-ActiveSpeaker dataset contains 3.65 million frames and 5.3 million annotated faces, an average of 1.45 faces per frame. At that density, a graph with 500 to 2000 faces in sorted temporal order spans 345 to 1379 frames, which corresponds to between 13 and 55 seconds for a 25 frames/second video. In other words, the nodes in a graph can be up to about 1 minute apart, and SPELL can effectively reason over that long temporal window within a limited memory and compute budget.

For comparison, the temporal window size in MAAS is 1.9 seconds, and TalkNet uses up to 4 seconds as long-term sequence-level temporal context.
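The temporal-context estimate above is simple arithmetic, reproduced here as a sketch. Like the estimate in the text, it assumes faces are spread evenly across frames at the dataset-wide average of 1.45 faces per frame. The function name is illustrative.

```python
from typing import Tuple

FACES_PER_FRAME = 1.45  # 5.3M annotated faces / 3.65M frames in AVA-ActiveSpeaker
FPS = 25.0


def temporal_span(num_faces: int, faces_per_frame: float = FACES_PER_FRAME, fps: float = FPS) -> Tuple[float, float]:
    """Return (frames, seconds) spanned by `num_faces` time-sorted face nodes."""
    frames = num_faces / faces_per_frame
    return frames, frames / fps


print(round(5.3e6 / 3.65e6, 2))  # 1.45
for n in (500, 2000):
    frames, seconds = temporal_span(n)
    print(f"{n} faces -> ~{frames:.0f} frames -> ~{seconds:.1f} s")
# 500 faces -> ~345 frames -> ~13.8 s
# 2000 faces -> ~1379 frames -> ~55.2 s
```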

Our work on spatio-temporal graphs for active speaker detection was published at ECCV 2022. The manuscript can be found here. An earlier blog post provides more details.

Action Detection: Task formulated as node classification

In the AVA-ActiveSpeaker dataset, the ASD problem setup provides labeled faces and labeled face tracks as input. That greatly simplifies graph construction, because the nodes and edges are easy to identify.

Other problems, such as Action Detection, do not provide ground-truth object (person) locations and tracks. For these, we first run pre-processing to detect objects and object tracks, and then apply SPELL to the resulting node classification problem. As in the previous case, we use domain knowledge to construct a sparse graph: the "object-centric" graphs are designed with the underlying application in mind.

On average, our graphs are ~90% sparse. This is a key difference from visual transformer-based methods, which rely on dense General Matrix Multiply (GEMM) operations. Our sparse GNNs allow us to:

(1) achieve slightly better performance than transformer-based models;
(2) aggregate temporal context over 10x longer windows than transformer-based models (100 s vs. 10 s); and
(3) achieve 2–5X compute savings compared to transformer-based methods.
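The gap between dense GEMMs and sparse message passing can be seen by counting multiply-accumulates (MACs) for one context-mixing step. This sketch assumes a dense N×N mixing matrix (for example, attention weights times values) against per-edge accumulation at 90% sparsity. The node count and feature width are hypothetical. It covers only this one operation; end-to-end savings also depend on parts of the model this count ignores, such as feature extraction.

```python
def dense_mixing_macs(num_nodes: int, dim: int) -> int:
    """MACs for one dense (N x N) @ (N x d) GEMM, e.g. attention weights times values."""
    return num_nodes * num_nodes * dim


def sparse_mixing_macs(num_edges: int, dim: int) -> int:
    """MACs for sparse message passing: one d-dim accumulation per directed edge."""
    return num_edges * dim


N, D = 2000, 256                 # hypothetical node count and feature width
E = (N * (N - 1)) // 10          # keep 10% of possible directed edges (~90% sparse)
dense = dense_mixing_macs(N, D)
sparse = sparse_mixing_macs(E, D)
print(dense, sparse, round(dense / sparse, 1))  # 1024000000 102348800 10.0
```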

We have open-sourced our software library, GraVi-T. At present, GraVi-T supports multiple video understanding applications, including Active Speaker Detection, Action Detection, Temporal Segmentation, and Video Summarization. See the GraVi-T library for more on these applications.

Compared to transformers, our graph approach can aggregate context over 10x longer video while consuming ~10x less memory and 5x fewer FLOPs. Our first and main work on this topic (Active Speaker Detection) was published at ECCV'22. Watch out for our latest publication at the upcoming CVPR 2024 on video summarization, also known as video highlight reel creation.

Our approach of modeling video as a sparse graph outperformed complex SOTA methods on several applications and secured top places on several leaderboards. These include ActivityNet 2022 and the Ego4D audio-video diarization challenge at ECCV 2022 and CVPR 2023. Source code for training these past challenge-winning models is also included in our open-sourced software library, GraVi-T.

We are excited about this generic, lightweight, and efficient framework and are working towards new applications. More exciting news is coming soon!
